Beep Boop Beep: Explaining Query Fan-Out and Its Application in Search Everything Optimization (SEO)

Recently, I had a client ask me about ranking for a particular keyword. I kind of laughed. Not because they did anything wrong, but because I still have these recurring dreams (and wishes) that search actually still works this way.

For those of you who have some familiarity with search marketing, you know that’s what “used” to happen. Choose a keyword and go. That WAS the basis of search engine optimization (SEO). In my early SEO days, our bank wanted to rank for “best bank in Gainesville, GA” and with a little magic (placing that keyword XX times on a web page, tricking the system by getting backlinks to that page, and convincing someone to list you on a top 10 list), boom! #1 Rankings Here We Come! My first ever published article on SEO, about how to track ROI, was featured on the cover of ABA’s Bank Marketing Magazine in 2006. Thanks to my growing expertise in SEO at the time (and this little thing called the Great Recession), I left financial services for my first agency job dabbling in everything from SEO to PPC and all ads and UX in between. But what I (and our digital team at the time) quickly became known for was helping clients rank for everything from lab beakers to poultry vaccines.

Then – and for years following – Google’s algorithm was a game. If you knew, you knew, and if you didn’t, you lost. However, since 2020, algorithms have become much wiser and now truly look for those who have real expertise, real experience, and trusted authority on a given topic. So, if you’re going to rank for something you “actually” have to be a leader and have to “actually” be an expert and “actually” know what you’re talking about.

With the growing adoption of AI by Google and growing interest / adoption in other LLMs like ChatGPT and Perplexity, SEO is now about proving you can command real authority on a topic.

As an aside, we all know that AI is new for all of us. Every time I complete a new query in ChatGPT or Google VEO, I imagine the system sounds like R2-D2 and makes random sounds externally like when R2 is trying to open a door. Then, when it returns void or can’t complete the query (which happens sometimes, especially when doing something requiring Agentic AI), it screams like R2 as well (I still laugh every time I hear that sound).

Back to the topic, anytime you start a new query, what is ACTUALLY happening? And how does that information help us when it comes to search marketing?

To explain how this and other LLM platforms work when it comes to queries and searches, it all starts with query fan-out.

What Is Query Fan-Out?

Google recently rolled out AI Mode (congrats to my buddy Eric Beatty who led creative at Google for this rollout). This new feature utilizes Google Gemini (Google’s own AI) to answer a user’s query.

While similar to ChatGPT, Gemini is more directly tied to Google’s Search algorithm than other LLM platforms. Google’s AI Mode uses a technique they now call “query fan-out” to provide its response (they even got a patent on the structure!).

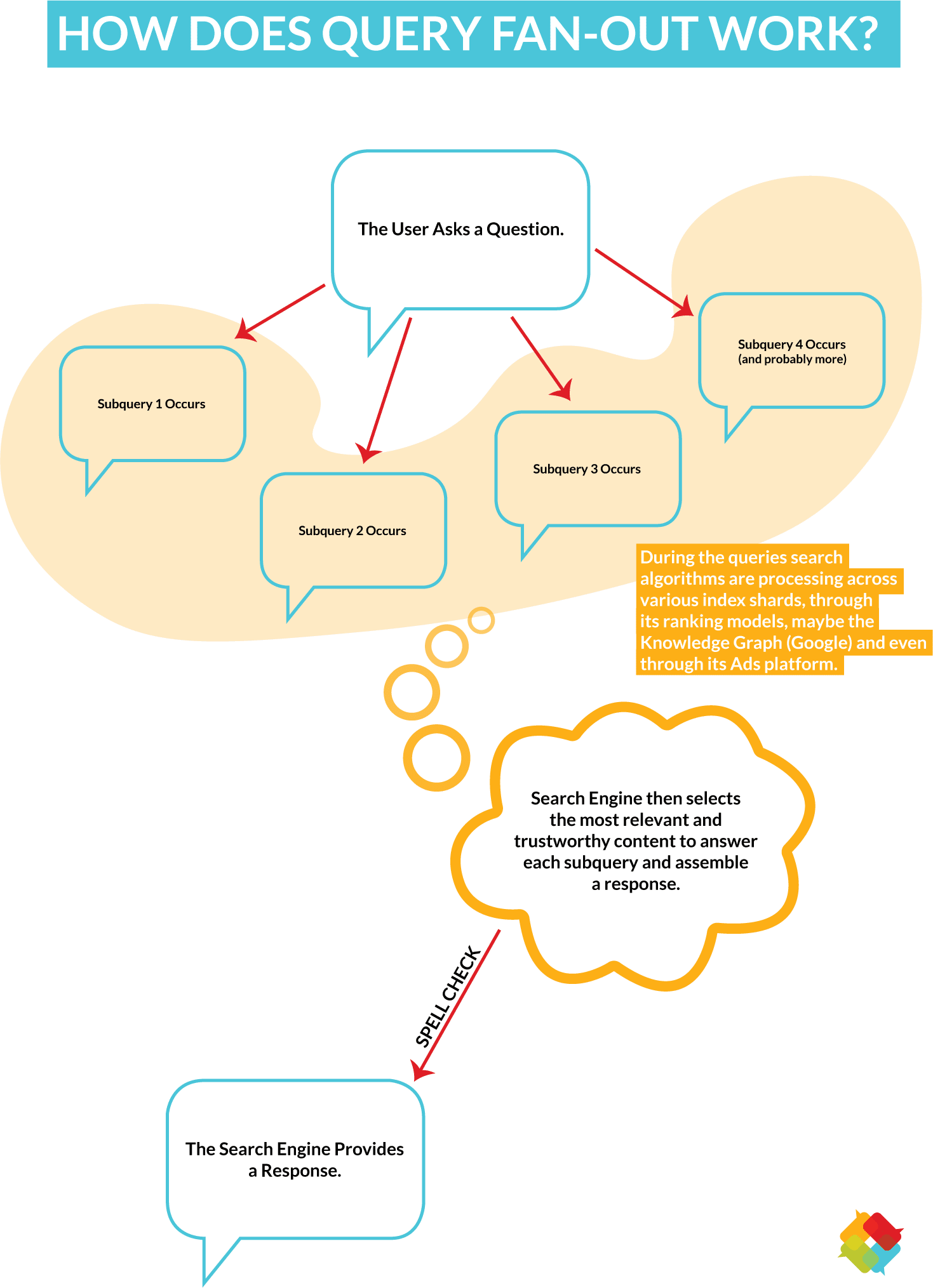

Consider this: you type into your Google AI Mode search “What medical office in Gainesville, GA offers the best treatments for weight loss?” AI Mode will then divide this into multiple subqueries like “best treatments for weight loss,” “medical office in Gainesville, GA,” “medical weight loss in Gainesville, GA,” and more. That is Query Fan-Out. Google uses this technique in its AI Overview and AI Mode responses, deconstructing the user’s initial query into multiple simultaneous subqueries to gather detailed information before returning a cohesive response via its AI.

By utilizing this model, Google further explores the user’s actual intent in their search and other related topics in parallel. If you think about it, it makes a lot of sense. When you ask a human a question, neurotransmitters are released to create the neural connections to form a response. Our brains shift to a search and process mode focused on the information received and accessing memories that we have to generate potential answers. That’s not much different from how it works with query fan-out. Google’s AI Mode takes the question and groups it into multiple semantic (language) groupings so the system can provide a response. Those groupings are the “subqueries” that are asked for the system to provide a response. So rather than traditional search where a single set of words drives the response, with AI Mode the words are grouped so Gemini can use its “memory banks” (or other websites across the internet) to connect dots to provide a response. This happens in a series of steps:

Step 1: Analyze the question.

Google Gemini reviews the query inclusive of the type of information requested and/or problem to be solved.

Step 2: Generate sub-queries.

The query is then broken into multiple, contextually-related subqueries.

Step 3: Perform parallel searches.

These subqueries are run as simultaneous searches utilizing Google’s existing search algorithms.

Step 4: Gather information.

Google selects the most relevant and trustworthy content to answer each subquery.

Step 5: Assemble a response.

Gemini then combines the data from these subqueries into a cohesive response citing sources.
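If it helps to see the five steps as code, here is a minimal Python sketch. To be clear, this is NOT Google’s implementation. The corpus, the `.example` source names, the hard-coded subqueries, and the word-overlap scoring are all made up for illustration; a real system would use a model to write the subqueries and a real search index to answer them.

```python
from concurrent.futures import ThreadPoolExecutor

# Toy stand-in for a search index (hypothetical sources, not real sites).
CORPUS = {
    "clinic-a.example": "medical weight loss treatments in Gainesville, GA",
    "health-blog.example": "best treatments for weight loss compared",
    "city-guide.example": "medical office directory for Gainesville, GA",
    "recipes.example": "weeknight dinner ideas",
}


def generate_subqueries(query):
    """Steps 1-2: hard-coded here; a real system would derive these from the query."""
    return [
        "best treatments for weight loss",
        "medical office in Gainesville, GA",
        "medical weight loss in Gainesville, GA",
    ]


def search(subquery):
    """Steps 3-4: score each source by shared words and keep the top two."""
    words = set(subquery.lower().split())
    scored = []
    for source, text in CORPUS.items():
        overlap = len(words & set(text.lower().split()))
        if overlap:
            scored.append((overlap, source))
    # Highest overlap first; ties broken alphabetically by source name.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [source for _, source in scored[:2]]


def fan_out(query):
    subqueries = generate_subqueries(query)
    # Run every subquery at the same time (the "fan-out").
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(search, subqueries))
    # Step 5: merge the sources to cite, dropping duplicates.
    cited = []
    for sources in results:
        for source in sources:
            if source not in cited:
                cited.append(source)
    return {"subqueries": subqueries, "sources": cited}


result = fan_out(
    "What medical office in Gainesville, GA offers the best treatments for weight loss?"
)
print(result["sources"])
```

Notice that the recipe page never gets cited. It simply doesn’t answer any of the subqueries, which is the whole point: content gets pulled in only when it answers one of the smaller questions.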

These steps are simple, but the process a search engine goes through may not seem so obvious. Between steps 3 and 4 there is a LOT that is happening. Google is reviewing its ranking and models to ensure accuracy in the response, it’s considering the Knowledge Graph, it’s looking at Ads on those topics, and it’s considering indexed shards and spell checking. There’s a lot that happens very quickly. If you’re a more visual learner, check out this infographic:

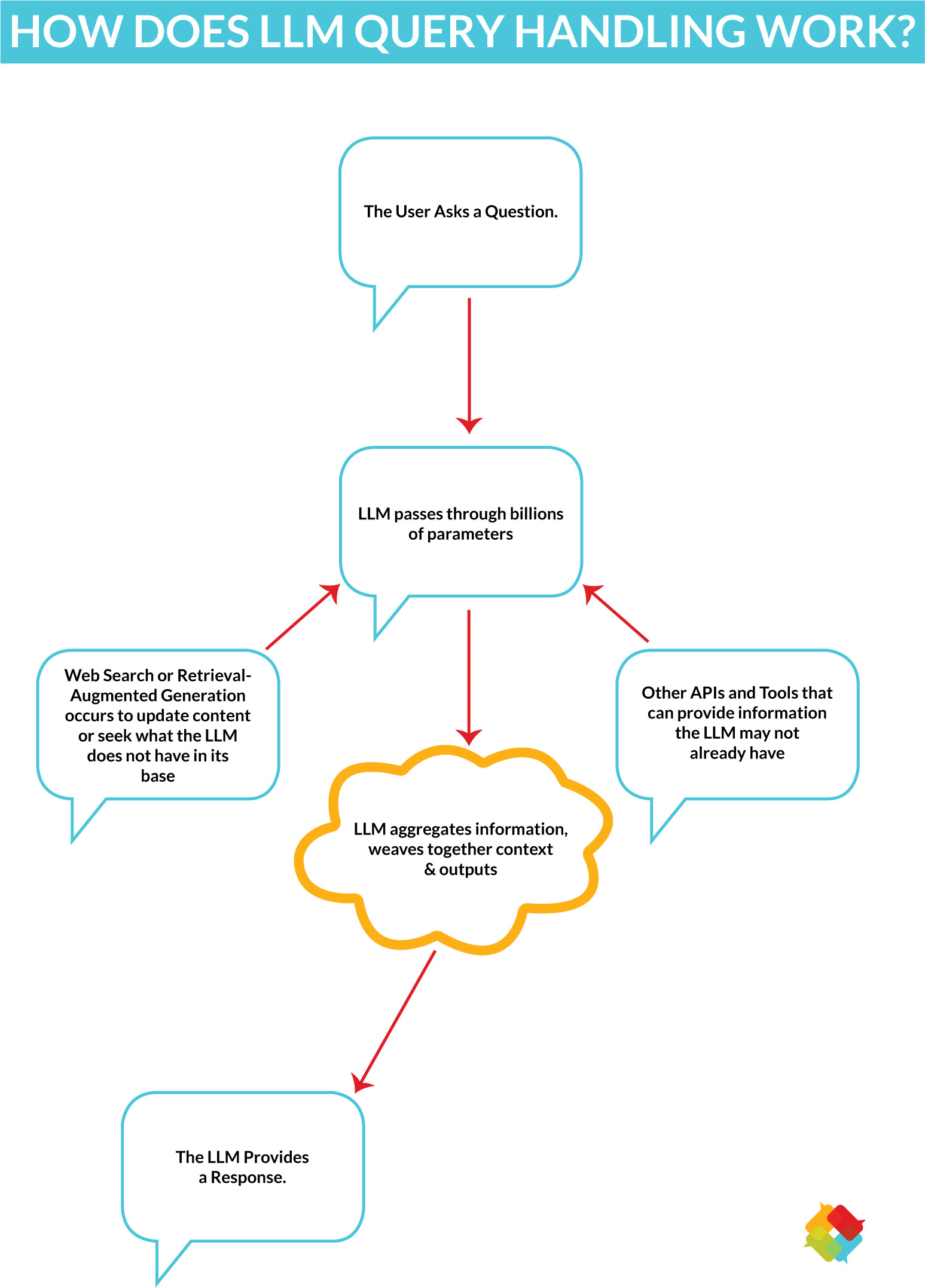

Just for comparison’s sake, this is DIFFERENT than how ChatGPT and other LLMs function. The only time that most LLMs complete something close to a query fan-out is IF the LLM doesn’t already have some base knowledge of the topic being requested. Those occur as extensions after the LLM has gone through its parameters. Think about it this way – in search, the query fan-out is focused on gathering information from external sources. From an LLM like ChatGPT, processing occurs FIRST within its subset of information and then a query fan-out may occur to pull information back into its set of information. All of that happens to hold data WITHIN the LLM – where Google’s supposed process is all external. Here’s an example of a platform like ChatGPT’s processing:

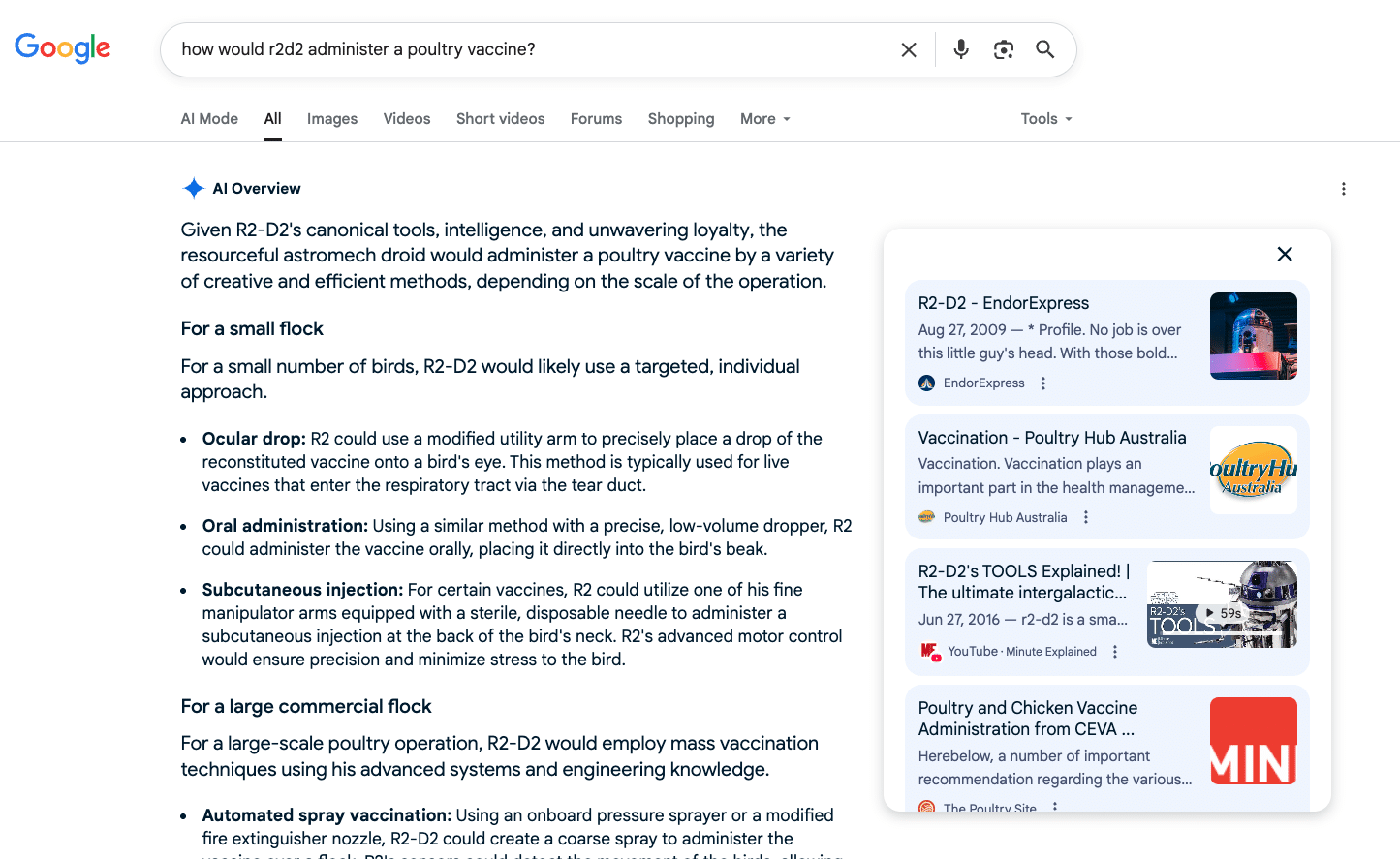

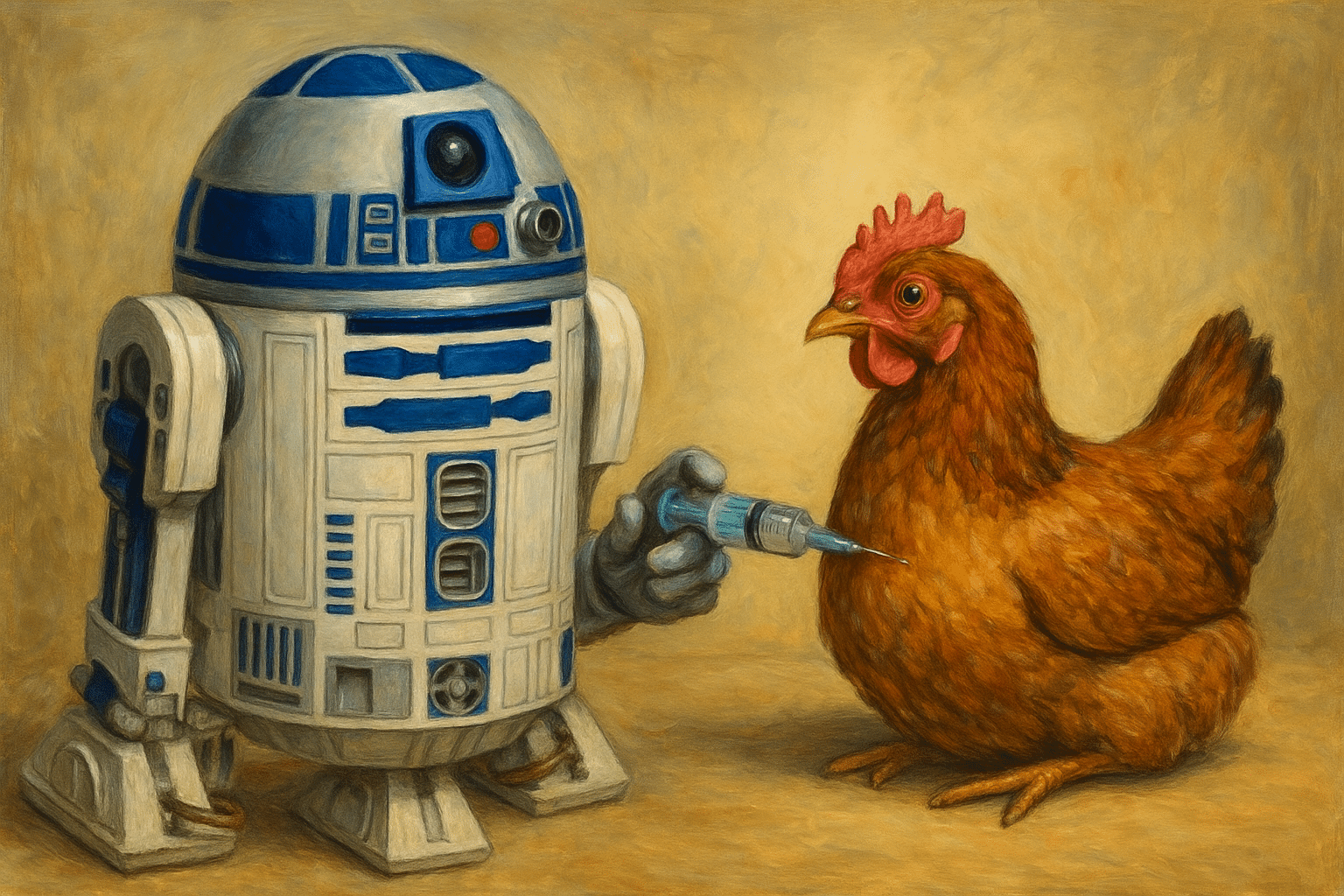

R2-D2 and Poultry Vaccines

Ok, so it’s DragonCon weekend and I thought it would be best if we got a little weird to show how this works in real life. So, I took two of the topics mentioned above: everyone’s favorite fun-loving astromech and the other obvious choice, poultry vaccines. I asked Google “how would R2-D2 administer a poultry vaccine?” Of course someone would’ve written an article about this……not at all. So, the search forced Google Gemini to look at disparate topics and try to answer the question. The query that I asked was likely broken down into a myriad of subqueries like “how are poultry vaccines administered,” “how does R2-D2 operate,” “what knowledge does R2-D2 have,” “how are single birds vaccinated,” “commercial poultry vaccination,” and a large number of other subqueries. If you look specifically at the sources Google chose, they relate to the types of tools R2-D2 has, how poultry vaccination is done, vaccination robots, and more, pulling this information from websites, YouTube, and Wookieepedia (the Star Wars Wiki).

The response I received is amazing: I’ve placed a screenshot so you can get the gist but here’s the entirety of Google’s AI Overview response:

Given R2-D2’s canonical tools, intelligence, and unwavering loyalty, the resourceful astromech droid would administer a poultry vaccine by a variety of creative and efficient methods, depending on the scale of the operation.

For a small flock

For a small number of birds, R2-D2 would likely use a targeted, individual approach.

- Ocular drop: R2 could use a modified utility arm to precisely place a drop of the reconstituted vaccine onto a bird’s eye. This method is typically used for live vaccines that enter the respiratory tract via the tear duct.

- Oral administration: Using a similar method with a precise, low-volume dropper, R2 could administer the vaccine orally, placing it directly into the bird’s beak.

- Subcutaneous injection: For certain vaccines, R2 could utilize one of his fine manipulator arms equipped with a sterile, disposable needle to administer a subcutaneous injection at the back of the bird’s neck. R2’s advanced motor control would ensure precision and minimize stress to the bird.

For a large commercial flock

For a large-scale poultry operation, R2-D2 would employ mass vaccination techniques using his advanced systems and engineering knowledge.

- Automated spray vaccination: Using an onboard pressure sprayer or a modified fire extinguisher nozzle, R2-D2 could create a coarse spray to administer the vaccine over a flock. R2’s sensors could detect the movement of the birds, allowing him to adapt and ensure even coverage.

- As in real-world automated systems, R2 would create a “digital twin” of the flock to track individual birds and ensure proper vaccine dispersal.

- Drinking water dispenser: R2 could use his scomp link to interface with the farm’s watering system. He would then use his internal storage compartment to add the correct amount of reconstituted vaccine to the birds’ water supply, using skimmed milk powder to neutralize any chlorine.

- In-ovo injection (at the hatchery): As the ultimate “astromech” droid, R2 is an expert in technical processes and could operate with machines in a hatchery setting. In this scenario, he would work with automated equipment that injects the vaccine directly into a developing embryo.

For special or emergency situations

When faced with a challenge, R2’s quick-witted nature and ability to improvise would come to the fore.

- Remote-controlled sprayer: R2 could deploy a remote-controlled, all-terrain robotic device (similar to a modified probe droid) to navigate difficult or hazardous areas of a chicken house. The device would carry and spray the vaccine while R2 monitored and directed it.

- Lariat for difficult birds: R2-D2 has been shown to use a lasso-like appendage. While unlikely for administering a vaccine, R2 might use a gentle version of this tool to calm and secure a particularly skittish bird for an individual injection.

Genius, right? So obvious how R2 would actually do this. And to make it even better, I asked ChatGPT to render me a photo of this occurring. Apparently, R2 even uses some sort of modified human hand to administer said vaccines (see AI-generated Dutch still life painting).

With the technique of query fan-out explained, how does this relate to you and your business?

Application of Query Fan-Out

Consider the content that you post, or that relates to your business, on every channel online. Your website. YouTube. Instagram. LinkedIn. Reddit. Quora. Podcasts. Name it. Each of these channels represents who you are, what you do, what you stand for, and the quality you provide. Now, what content is present? Is the content on these channels SO GOOD that your community, your industry, Google would consider you A or THE expert on that topic? How much of yourself and your knowledge can you share so people believe you are the expert in your space? That’s the game of search in today’s marketplace.

In order for you to exercise a strategy that would allow you to, let’s say, “participate” in query fan-out you have to build a strategy around your offerings that gives Google and other LLMs enough material so they KNOW that you are an expert. In reality, this has been the game of organic search marketing all along. Create amazing content for your consumers. Make sure others recognize your awesomeness and link back to you or talk about you. Make sure you’ve got everything organized so Google sees these things and recognizes what people know about you. You win. And while yes, there are strategies behind this, that’s the general structure.

From a practical standpoint, place one of your product or service pages at the center of a map. From there, what information would need to be provided to PROVE that YOU – yes YOU – are the expert in that service in the geographic area you work in? Build a content plan around that service that includes not just your website but video, social media, stories and interviews by others about you, podcast interviews, and more. Cover all the bases you can with your service page serving as the hub – the page you want people to visit if they want to work with you – and all the other pages and content pointing in its direction. Your page should only point to other items when strategically relevant and that service page should be the best d@#$ page it can be with video, links and content for days proving that YOU are the only organization they should work with for that product or service. That – THAT – is the core of modern SEO inclusive of any “search” platform (Google, ChatGPT, Perplexity, other LLMs, etc.). So if you hear the acronyms AEO, GEO, AIO, etc. just know it all comes back to this. And yes – there are some tricks out there right now to game the system in some LLMs to generate “responses” for your brand but all of us who have been in SEO for more than a few days know that game will be short-lived.
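For the spreadsheet-and-script crowd, the hub-and-spoke map above can be checked with a few lines of Python. This is only a sketch under assumptions: the hub URL, the content names, and the links are hypothetical placeholders, and in practice you would fill them in from your own content inventory.

```python
# Hypothetical content plan: each piece of content -> the pages it links to.
HUB = "/services/medical-weight-loss"
LINKS = {
    "/blog/weight-loss-faq": ["/services/medical-weight-loss"],
    "youtube:patient-story": ["/services/medical-weight-loss"],
    "podcast:guest-interview": ["/about"],
    "linkedin:case-study": [],
}


def spokes_missing_hub(hub, links):
    """Return the content pieces that do not point back to the hub page."""
    return sorted(name for name, targets in links.items() if hub not in targets)


print(spokes_missing_hub(HUB, LINKS))
```

Anything the function returns is a spoke that isn’t pointing at your hub yet, which makes it a quick to-do list for the content plan.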

If you decide to take the shortcut with a pop-up “GEO” firm – good luck. Early in the days of SEO I had clients come to my prior agency asking for help so they could get off Google’s blacklist for these sorts of tactics. And we were able to help them – but not before they had to lay off 75% of their staff and all take pay cuts. That’s a dangerous, very dangerous game to play for quick bucks and short-term success. Smart businesses play the long game. So, your real focus should be to just be the expert in your space. Just be the one that everyone else looks to for information in your industry. That’s the real challenge.

If doing this the right way (white hat and ethically) sounds like a lot of work – it is. And that’s the work our digital marketing team at Forum does daily. We’re constantly working to improve on these strategies and make sure that the strategy we build meets the real goal of our clients.

A Few Definitions

Agentic AI – Agentic AI refers to AI tools that make decisions and can take actions to achieve a human objective. They can accomplish a specific goal. For instance, some agentic AI platforms can create videos, others can mimic the human voice, and some can write code on your behalf. These are the tools that you’ve seen that animate people in old photos, or create crazy AI videos like Google VEO 3 and others.

Index Shards – Index shards are smaller partitions of a larger index that store information in subsets so that storage and query processing can scale. They can also be referred to as index clustering.

Knowledge Graph – A knowledge graph is a structured network of real-world entities and their relationships, organizing information in a way that machines can understand and process, enabling better search results, AI-driven insights, and more relevant results.

LLMs – Large Language Models, or LLMs, are advanced artificial intelligence systems that are trained on a massive quantity of text information and are built to understand, generate and process human language and communications. OpenAI (ChatGPT) is likely the most prominent of these platforms but Perplexity, Google Gemini, Meta AI, Grok, and others all classify as LLMs.

Retrieval-Augmented Generation – Also referred to as RAG, Retrieval-Augmented Generation is a technique to improve LLMs by combining information retrieval with generation. It works like this – a user issues a query and a retriever will go and collect information on the topic to bring it back. Those chunks of text are then inserted into the model’s prompt along with the user query so the model has fresh information to pull from. The LLM then uses what it retrieved and its internal knowledge to generate an answer. If you ask an LLM a question that is time sensitive like “What features are on the latest version of the iPhone?” without RAG it may say “I don’t know, but typically iPhones improve certain features…” and list those features. With RAG, the LLM can respond directly with the latest information. In short, RAG takes LLM data + data pulled from search engines to provide reasoning and the most recent facts.
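For the curious, the retrieve-then-insert part of RAG described above can be sketched in a few lines of Python. Everything here is an assumption for illustration: the “widget” documents are made up, the retriever is a simple word-overlap ranking rather than a real search engine, and the final step of sending the prompt to an LLM is left out.

```python
# Made-up document store; a real retriever would query a search index.
DOCUMENTS = [
    "The 2023 widget catalog lists three models: Mini, Standard, and Pro.",
    "Widget Pro adds a titanium case and a faster charger.",
    "Our office is closed on public holidays.",
]


def retrieve(query, documents, top_k=2):
    """Rank documents by words shared with the query and return the best few."""
    query_words = set(query.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query, chunks):
    """Insert the retrieved chunks into the prompt alongside the user's question."""
    context = "\n".join(f"- {chunk}" for chunk in chunks)
    return (
        "Use the context below to answer.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


question = "What features does the Widget Pro add?"
chunks = retrieve(question, DOCUMENTS)
print(build_prompt(question, chunks))
```

The prompt that comes out is what would be handed to the model, so the answer can lean on the retrieved text instead of only on what the model learned in training.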
